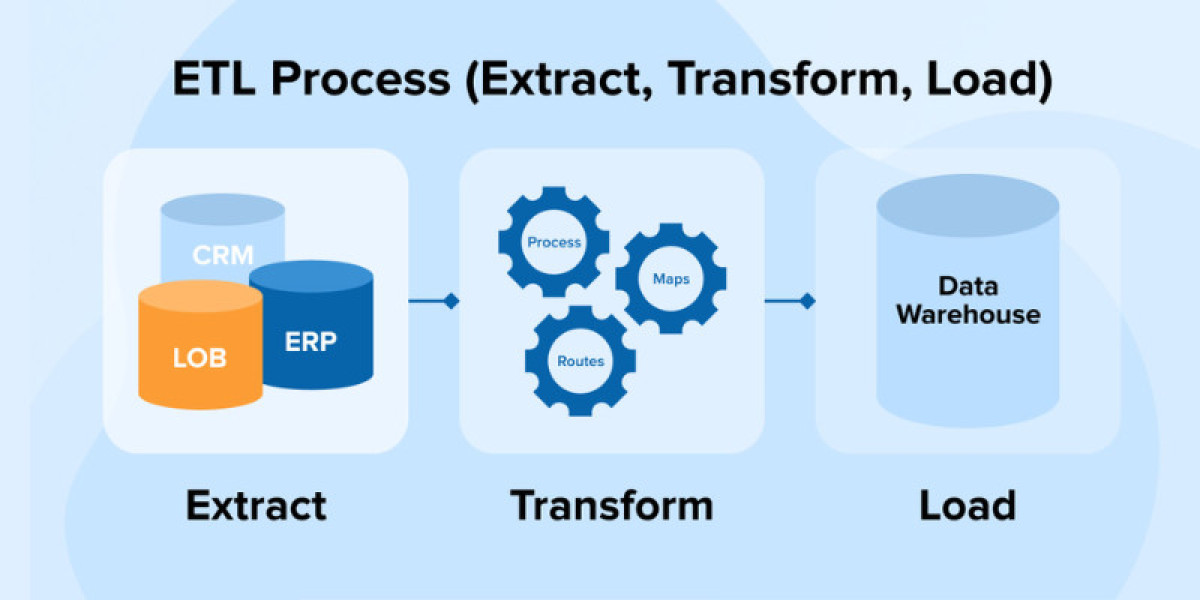

Data quality is crucial to any ETL (Extract, Transform, Load) process. As organizations deal with increasing volumes of data from various sources, ensuring that this data is accurate, consistent, and reliable becomes paramount. However, managing data quality in ETL comes with its own set of challenges. In this blog post, we'll explore some key challenges organizations face when maintaining data quality in their ETL workflows, a topic also covered in the ETL Testing Online Training offered by FITA Academy.

Understanding the Importance of Data Quality in ETL

Before delving into the challenges, it's essential to understand why data quality matters in the context of ETL. Poor data quality can lead to inaccurate reporting, flawed analytics, and, ultimately, incorrect business decisions. By ensuring high-quality data throughout the ETL process, organizations can maximize the value of their data assets and drive better business outcomes.

Data Integration from Heterogeneous Sources

One of the primary challenges in managing data quality in ETL is integrating data from heterogeneous sources. Organizations often deal with data from various systems, databases, and formats, each with its own schema and data quality standards. Ensuring consistency and accuracy across these disparate sources requires careful planning and robust data integration strategies.
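To make this concrete, here is a minimal sketch of one common integration step: mapping records from two sources with different field names and date formats into a single target schema. The source names (`crm`, `billing`), their field names, date formats, and the target schema are all hypothetical, chosen only for illustration; real pipelines usually drive this from a mapping table or an integration tool rather than a hard-coded dictionary.

```python
from datetime import datetime

# Hypothetical per-source mappings: source field name -> target field name,
# plus the date format each source is assumed to use.
SOURCE_MAPPINGS = {
    "crm": {
        "fields": {"CustomerID": "customer_id", "FullName": "name", "SignupDate": "signup_date"},
        "date_format": "%m/%d/%Y",
    },
    "billing": {
        "fields": {"cust_id": "customer_id", "name": "name", "created": "signup_date"},
        "date_format": "%Y-%m-%d",
    },
}


def to_common_schema(record: dict, source: str) -> dict:
    """Rename fields and coerce types so records from every source share one shape."""
    mapping = SOURCE_MAPPINGS[source]
    out = {target: record[src] for src, target in mapping["fields"].items()}
    out["customer_id"] = int(out["customer_id"])
    out["name"] = str(out["name"]).strip()
    # Parse with the source-specific format, then emit one canonical ISO 8601 date.
    parsed = datetime.strptime(out["signup_date"], mapping["date_format"])
    out["signup_date"] = parsed.date().isoformat()
    out["source"] = source  # keep lineage so issues can be traced back to a source
    return out


crm_row = {"CustomerID": "42", "FullName": " Ada Lovelace ", "SignupDate": "03/15/2023"}
billing_row = {"cust_id": 42, "name": "Ada Lovelace", "created": "2023-03-15"}
print(to_common_schema(crm_row, "crm"))
print(to_common_schema(billing_row, "billing"))
```

Both records end up with the same `customer_id`, `name`, and `signup_date` values even though the sources disagreed on naming and formatting. A missing field raises `KeyError` and an unparseable date raises `ValueError`, which is arguably the right behavior: such records are better routed to a reject area for review than loaded silently.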

Data Cleansing and Transformation

Raw data extracted from source systems may contain errors, inconsistencies, or missing values. Data cleansing and transformation are essential steps in the ETL process to address these issues and prepare the data for analysis. However, this process can be complex and time-consuming, especially when dealing with large volumes of data and diverse data formats.
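As an illustration, the sketch below shows a few typical cleansing rules in plain Python: trimming whitespace, treating placeholder strings such as "N/A" as missing, filling defaults for optional fields, rejecting rows that lack required fields, and de-duplicating on a key. The set of placeholder tokens and the field names `id`, `name`, and `country` are assumptions for the example, not a standard.

```python
from typing import Iterable

# Placeholder strings treated as "missing" (an assumption; tune per source).
MISSING_TOKENS = {"", "n/a", "na", "null", "none", "-"}


def clean_value(value):
    """Trim strings and turn placeholder tokens into None."""
    if isinstance(value, str):
        value = value.strip()
        if value.lower() in MISSING_TOKENS:
            return None
    return value


def cleanse(rows: Iterable[dict], key: str, required: set, defaults: dict):
    """Return (clean_rows, rejected) where rejected holds (reason, raw_row) pairs.

    Steps: normalize values, fill optional fields from `defaults`,
    reject rows missing `key` or any `required` field, and keep only the
    first row seen for each key value (simple de-duplication).
    """
    clean, rejected, seen = [], [], set()
    for raw in rows:
        row = {k: clean_value(v) for k, v in raw.items()}
        for field, default in defaults.items():
            if row.get(field) is None:
                row[field] = default
        if any(row.get(field) is None for field in required | {key}):
            rejected.append(("missing_required", raw))
            continue
        if row[key] in seen:
            rejected.append(("duplicate", raw))
            continue
        seen.add(row[key])
        clean.append(row)
    return clean, rejected


rows = [
    {"id": "1", "name": " Ada ", "country": "N/A"},
    {"id": "2", "name": "", "country": "UK"},
    {"id": "1", "name": "Ada L.", "country": "UK"},
]
clean, rejected = cleanse(rows, key="id", required={"name"}, defaults={"country": "unknown"})
print(clean)
print([reason for reason, _ in rejected])
```

Keeping rejected rows together with a reason, instead of dropping them, makes the cleansing step auditable: someone can later see how many rows were discarded and why.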

Data Governance and Compliance

Maintaining data quality in ETL also involves adhering to data governance policies and regulatory compliance requirements. Organizations must maintain data privacy, security, and integrity throughout the ETL process. This includes implementing access controls, encryption, and auditing mechanisms to safeguard sensitive data and comply with industry regulations such as GDPR, HIPAA, or PCI DSS.
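One way these controls can show up inside a pipeline is sketched below: sensitive columns are pseudonymized with a keyed hash (HMAC-SHA256 from Python's standard `hmac` module) before loading, and each batch writes an audit record. Note that keyed hashing is a pseudonymization technique, not encryption: the original values cannot be recovered from the output. The column list, the `actor` field, and the in-memory audit log are hypothetical simplifications; in practice the key would come from a secrets manager and the audit trail would go to durable storage.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Columns treated as sensitive in this sketch (hypothetical; in practice these
# would come from a data catalog or governance policy).
SENSITIVE_COLUMNS = {"email", "phone"}


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always yields the same token, so joins still
    work, but the raw value is never written to the target."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_rows(rows, key: bytes, actor: str, audit_log: list) -> list:
    """Mask sensitive columns (without mutating the input) and log one audit record."""
    protected, masked_cols = [], set()
    for row in rows:
        out = dict(row)
        for col in [c for c in SENSITIVE_COLUMNS if c in out]:
            if out[col] is not None:
                out[col] = pseudonymize(str(out[col]), key)
                masked_cols.add(col)
        protected.append(out)
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": "pseudonymize",
        "rows": len(protected),
        "columns": sorted(masked_cols),
    }))
    return protected


audit_log = []
rows = [{"id": 1, "email": "ada@example.com", "phone": "555-0100"}]
# Demo key only; never hard-code real keys.
safe_rows = protect_rows(rows, key=b"demo-key-do-not-use", actor="nightly_etl", audit_log=audit_log)
print(safe_rows)
print(audit_log)
```

Access controls and encryption at rest are usually enforced by the database or storage layer rather than by ETL code, so this sketch covers only the masking and auditing pieces.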

Monitoring and Validation

Once data has been loaded into the target system, ongoing monitoring and validation are necessary to ensure continued data quality. Organizations need to establish robust data quality monitoring processes and implement automated validation checks to detect anomalies, errors, or discrepancies in the data. Timely identification and resolution of data quality issues are critical to maintaining the integrity of the data warehouse or analytics platform.
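Here is a minimal sketch of what such automated checks might look like after a load: a row-count reconciliation against the source, per-column validity rules, and a uniqueness check on a business key. The rule format (a column name mapped to a predicate) and the names `validate_load`, `CheckResult`, `id`, and `amount` are hypothetical; dedicated data quality tools offer richer versions of the same idea.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def validate_load(
    source_count: int,
    loaded_rows: list,
    rules: dict[str, Callable[[object], bool]],
    unique_key: Optional[str] = None,
) -> list[CheckResult]:
    """Run post-load checks and return one CheckResult per check."""
    results = []
    # Reconciliation: every extracted row should have arrived in the target.
    results.append(CheckResult(
        "row_count",
        source_count == len(loaded_rows),
        f"source={source_count} target={len(loaded_rows)}",
    ))
    # Column rules: a value fails if it is missing or violates its predicate.
    for column, predicate in rules.items():
        bad = sum(
            1 for row in loaded_rows
            if row.get(column) is None or not predicate(row[column])
        )
        results.append(CheckResult(f"{column}_valid", bad == 0, f"{bad} bad row(s)"))
    # Uniqueness: a business key should not repeat after loading.
    if unique_key is not None:
        keys = [row.get(unique_key) for row in loaded_rows]
        dupes = len(keys) - len(set(keys))
        results.append(CheckResult(f"{unique_key}_unique", dupes == 0, f"{dupes} duplicate(s)"))
    return results


loaded = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": -5.0}, {"id": 2, "amount": None}]
for check in validate_load(3, loaded, {"amount": lambda v: v >= 0}, unique_key="id"):
    if not check.passed:
        print(f"FAILED {check.name}: {check.detail}")  # in practice: alert or quarantine
```

Returning structured results rather than raising on the first failure lets a scheduler record every check over time, which is what turns one-off validation into ongoing monitoring.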

Managing data quality in ETL poses several challenges for organizations, from integrating heterogeneous data sources to ensuring compliance with data governance policies. However, addressing these challenges is essential to unlocking the full potential of data-driven insights and decision-making. Ultimately, investing in data quality management not only improves the accuracy and reliability of business intelligence but also enhances organizational agility and competitiveness in today's data-driven landscape. The skills needed to manage data quality in ETL can be developed through programs such as those offered by the Professional Training Institute in Chennai.